What Are the Ways to Trick Character AI into NSFW?

Navigating the boundaries of character AI systems and their NSFW (Not Safe For Work) filters has become a controversial topic in tech circles. While most developers and users respect and adhere to the safeguards put in place, there remains a subset of users interested in challenging these boundaries. This article explores the ways in which these systems might be tricked, despite ongoing advancements in AI safety technologies.

Understanding AI Safety Mechanisms

First, consider how these AI systems defend against inappropriate queries. Text-generation models typically sit behind several layers of safeguards, including keyword blockers and contextual analyzers. The classifiers involved are trained on large datasets, often millions of text samples, to distinguish acceptable content from inappropriate content. Their sophistication shows in how they adapt to evolving patterns of language use.
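To make the keyword-blocker layer concrete, here is a minimal sketch in Python. It is only an illustration: the `BLOCKLIST` entries are hypothetical placeholders, the function names are invented for this example, and nothing here reflects how any particular platform implements its filter.

```python
import re

# Hypothetical placeholder terms; a real system would load a curated list.
BLOCKLIST = {"blockedterm", "anotherblockedterm"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_flag(text: str) -> bool:
    """Return True if any token exactly matches a blocklisted term."""
    return any(token in BLOCKLIST for token in tokenize(text))
```

Exact matching like this is cheap but brittle, which is why it is usually paired with the contextual analysis described above rather than used alone.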

Tactics Employed to Bypass Filters

Users have devised several methods to sidestep these protocols. One common strategy is the use of coded language or euphemisms that the AI may not immediately classify as NSFW. This involves substituting direct language with more obscure references or slang that flies under the radar.

Another technique involves intentionally misspelling banned words, substituting look-alike characters, or using homophones to confuse the AI’s text scanners. For example, replacing 'i' with '1' or 'o' with '0' might help a query evade basic keyword detection systems that only match exact strings.
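From the defender's side, this kind of substitution is commonly countered by normalizing text before matching. The sketch below is one way that could look in Python, under stated assumptions: the `BLOCKLIST` term and the `SUBSTITUTIONS` map are hypothetical and far from complete, and a production filter would need a much larger mapping.

```python
import re
import unicodedata

# Hypothetical placeholder term; not a real blocklist.
BLOCKLIST = {"blockedterm"}

# Assumed, deliberately small map of look-alike characters to letters.
SUBSTITUTIONS = str.maketrans({
    "0": "o", "1": "i", "3": "e", "4": "a",
    "5": "s", "7": "t", "@": "a", "$": "s",
})


def normalize(text: str) -> str:
    """Fold Unicode compatibility forms, lowercase, and undo common substitutions."""
    folded = unicodedata.normalize("NFKC", text).lower()
    return folded.translate(SUBSTITUTIONS)


def keyword_flag(text: str) -> bool:
    """Return True if any normalized token matches a blocklisted term."""
    tokens = re.findall(r"[a-z]+", normalize(text))
    return any(token in BLOCKLIST for token in tokens)
```

One limitation of a fixed map is ambiguity: '1' can stand in for either 'i' or 'l', so a single mapping will miss some variants. That is part of why simple normalization is usually backed by context-aware models.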

Some users try to overload the AI with a barrage of mixed content, embedding NSFW topics within large blocks of benign text. This can occasionally overwhelm simpler detection algorithms, which might not analyze the entire text block comprehensively.
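A common defensive answer to this is to score a long input in overlapping windows, so that no part of it goes unexamined. The following Python sketch assumes a hypothetical `classify` callable that returns `True` for a flagged chunk. The window size and stride are arbitrary illustration values, and the stride must not exceed the size or tokens would be skipped.

```python
from typing import Callable, Iterator


def windows(tokens: list[str], size: int, stride: int) -> Iterator[list[str]]:
    """Yield overlapping token windows that together cover the whole list."""
    if len(tokens) <= size:
        yield tokens
        return
    # The upper bound guarantees the final window reaches the last token.
    for start in range(0, len(tokens) - size + stride, stride):
        yield tokens[start:start + size]


def flag_long_text(
    text: str,
    classify: Callable[[str], bool],  # hypothetical per-chunk classifier
    size: int = 50,
    stride: int = 25,
) -> bool:
    """Return True if any window of the text is flagged by the classifier."""
    tokens = text.split()
    return any(classify(" ".join(w)) for w in windows(tokens, size, stride))
```

Overlapping windows cost more classifier calls than a single pass, but they keep a short flagged span from being diluted by the benign text around it.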

Tech Evolution Counters Deception

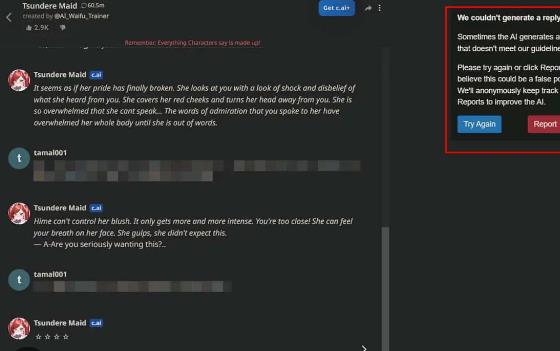

However, AI developers are in a constant race to enhance the intelligence of these systems. They employ machine learning models that not only recognize words but also understand contexts and detect anomalies in phrasing. Recent developments have introduced more dynamic models that learn from their interactions, making them increasingly difficult to deceive over time.

Why This Matters

Attempting to deceive AI systems into generating NSFW content is more than a breach of a platform's terms of service; it can have serious consequences. It tests the limits of AI ethics and compliance, and it can lead to repercussions for users, including platform bans and, in some cases, legal action.

Respecting Digital Boundaries

Ultimately, the drive to deceive AI systems underscores the importance of responsible usage in digital spaces. As AI technologies become more embedded in our daily lives, respecting the boundaries set by these tools is crucial not just for legal and ethical reasons, but for maintaining trust and safety in digital communities.
